Overview of builtin activation functions

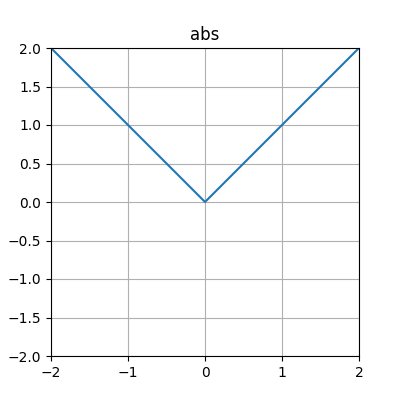

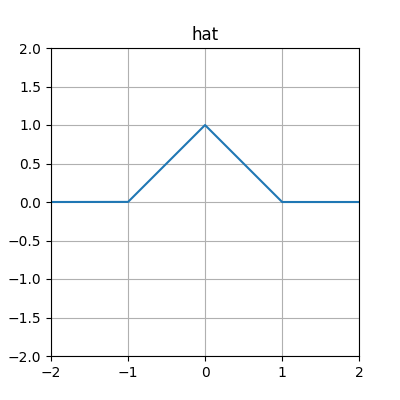

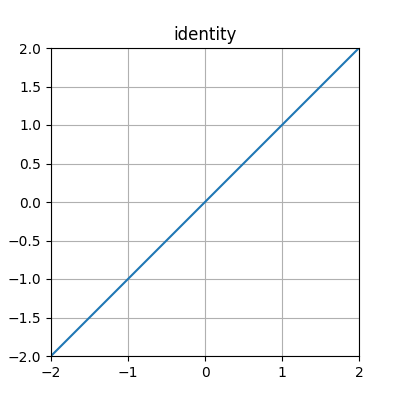

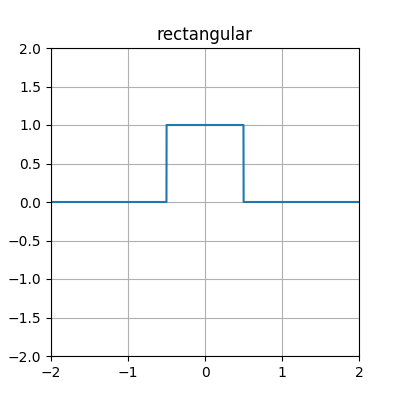

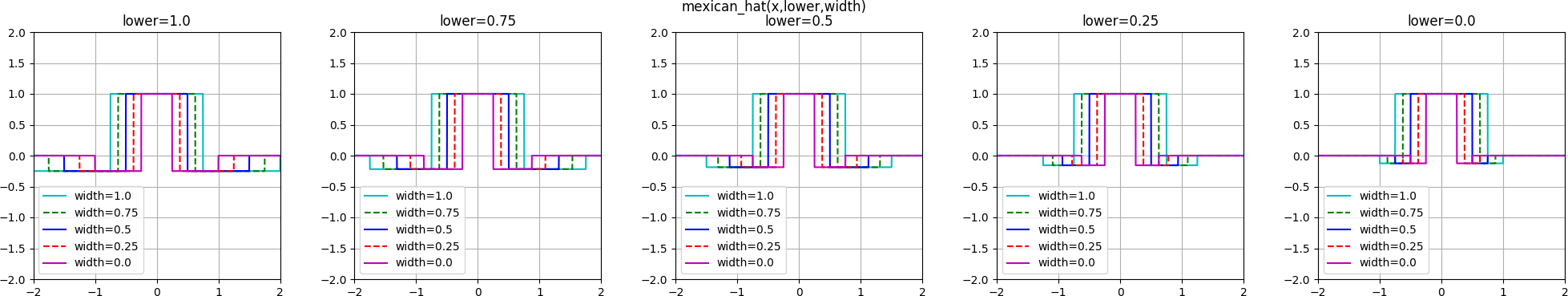

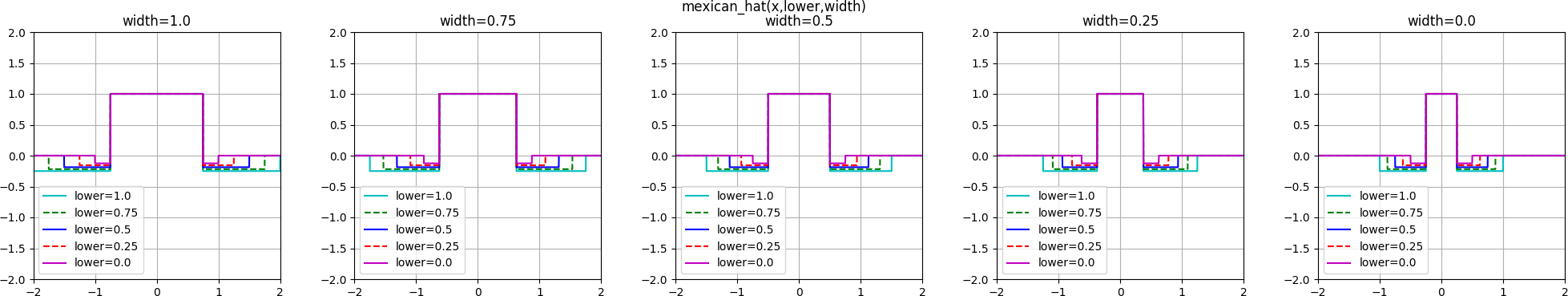

Note that some of these functions are scaled differently from the canonical versions you may be familiar with. The scaling is intended to place more of each function’s “interesting” behavior in the region \(\left[-1, 1\right] \times \left[-1, 1\right]\). Some functions are intended more for CPPNs (e.g., for HyperNEAT) than for “direct” problem-solving, as noted below; the mexican_hat function, for instance, is specifically intended for seeding HyperNEAT weight determination. However, even functions originally meant mainly for CPPNs can be of use elsewhere: abs and hat can each solve the xor example task in one generation, for instance. (Note that abs is itself included in several other functions, such as multiparam_relu.)
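As a rough illustration of the two points above, the sketch below compares a canonical sigmoid with a rescaled one, and shows why abs and hat are a natural fit for the xor task. The scale factor of 5, the hat definition max(0, 1 - |z|), and all function names here are assumptions made for illustration; they are not taken from the activations module.

```python
import math


def canonical_sigmoid(z):
    """Textbook logistic function."""
    return 1.0 / (1.0 + math.exp(-z))


def scaled_sigmoid(z, scale=5.0):
    """Logistic function with its input multiplied by an assumed scale factor.

    The scaled input is clamped before exponentiation to avoid overflow.
    """
    z = max(-60.0, min(60.0, scale * z))
    return 1.0 / (1.0 + math.exp(-z))


def hat(z):
    """Assumed triangular 'hat': 1 at z = 0, falling linearly to 0 at |z| = 1."""
    return max(0.0, 1.0 - abs(z))


def xor_with_abs(a, b):
    """Single node with weights +1 and -1, no bias, abs activation."""
    return abs(a - b)


def xor_with_hat(a, b):
    """Single node with weights +1 and +1, bias -1, hat activation."""
    return hat(a + b - 1.0)


if __name__ == "__main__":
    for z in (-1.0, 0.0, 1.0):
        print(z, round(canonical_sigmoid(z), 3), round(scaled_sigmoid(z), 3))
    for a, b in ((0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)):
        print(a, b, xor_with_abs(a, b), xor_with_hat(a, b))
```

With the assumed factor, the rescaled sigmoid sweeps almost its whole output range for inputs in [-1, 1], while the canonical one only moves from roughly 0.27 to 0.73. The xor helpers show that a single output node is enough with either abs or hat, which is consistent with the task being solvable very early in a run.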

The implementations of these functions can be found in the activations module.

The multiparameter functions below, and some of the others, are new. If you wish to substitute them for previously used activation functions, the following multiparameter functions are suggested replacements:

| Old | New | Notes |

|---|---|---|

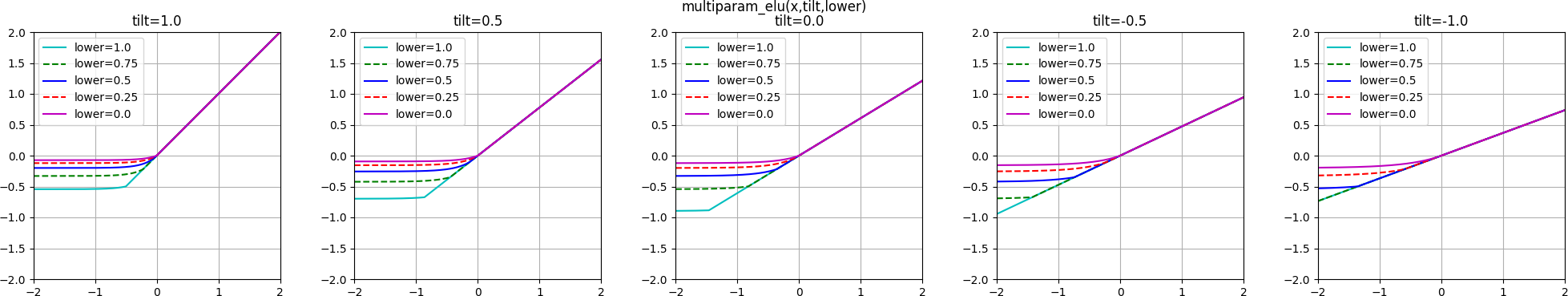

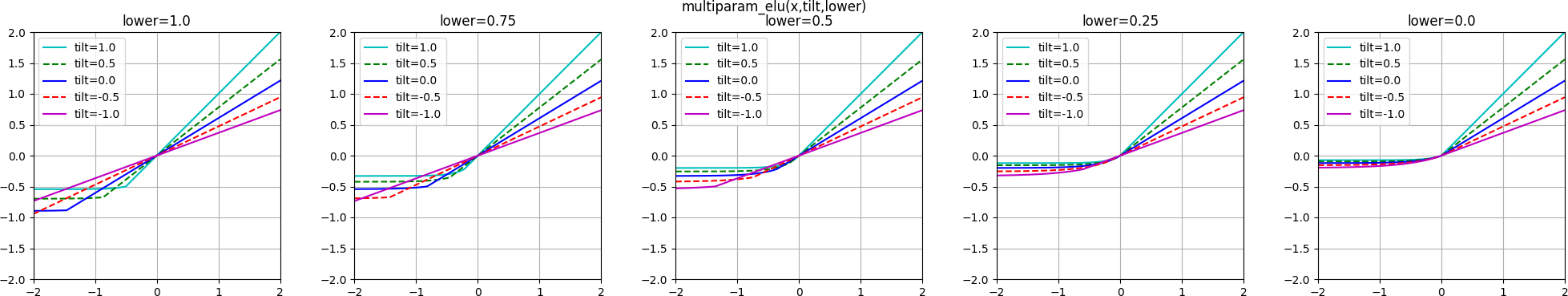

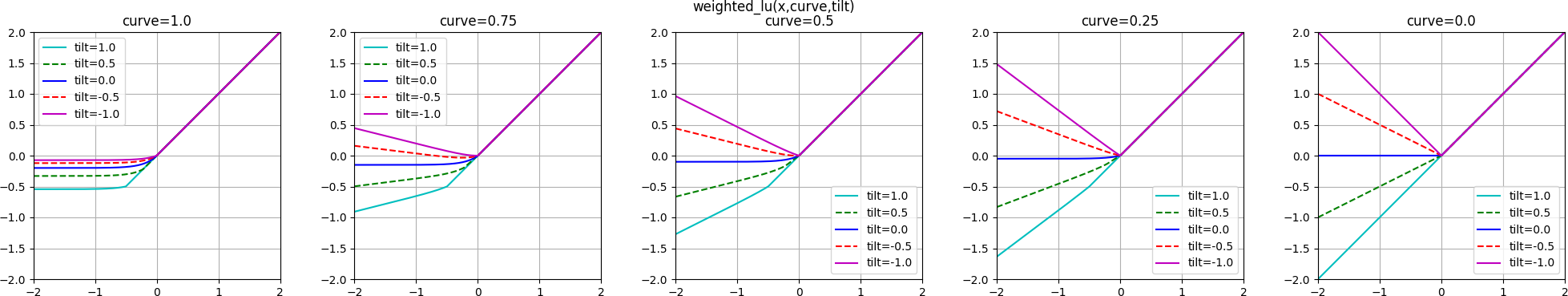

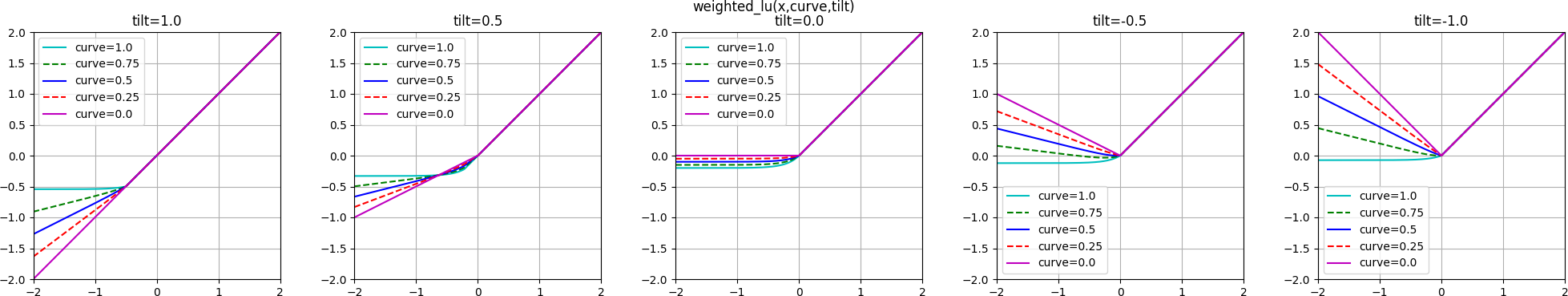

| abs | multiparam_relu, multiparam_relu_softplus, or weighted_lu | If the result does not need to be positive |

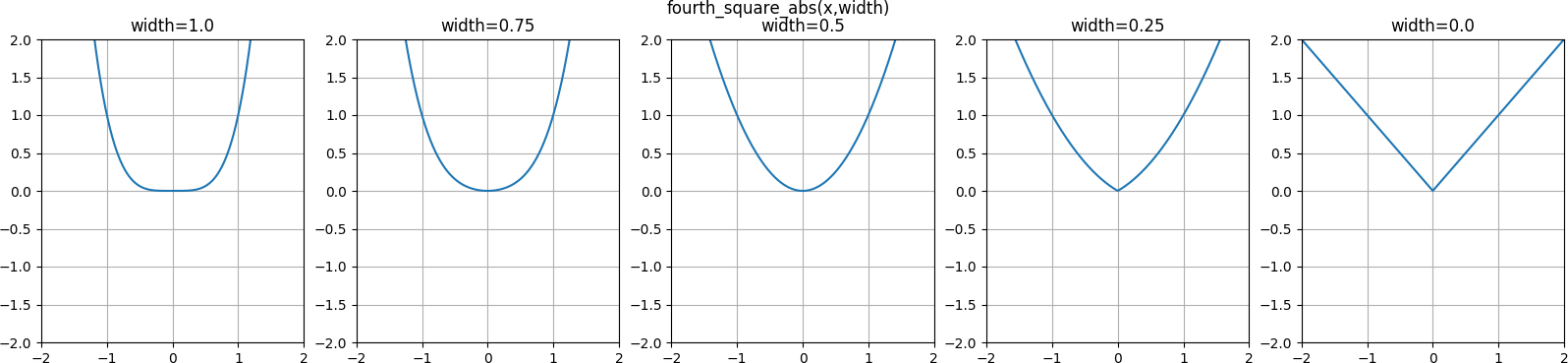

| abs | fourth_square_abs | If the result needs to be positive |

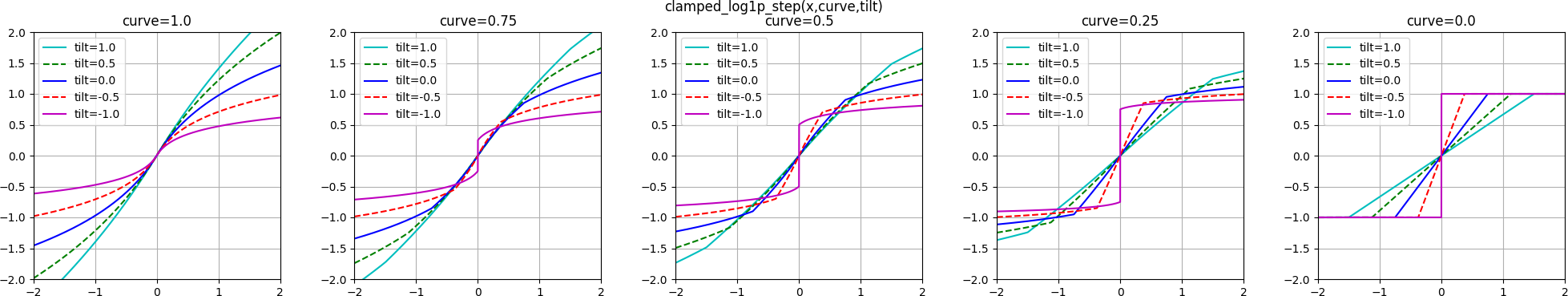

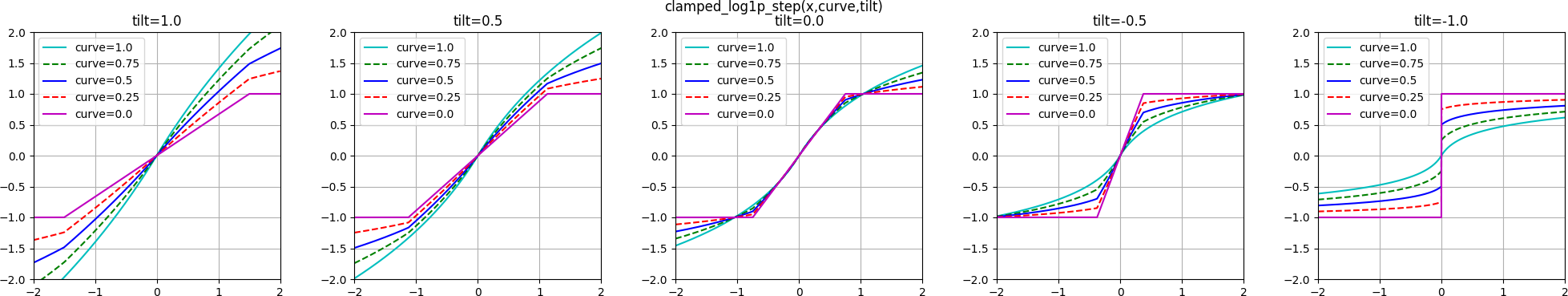

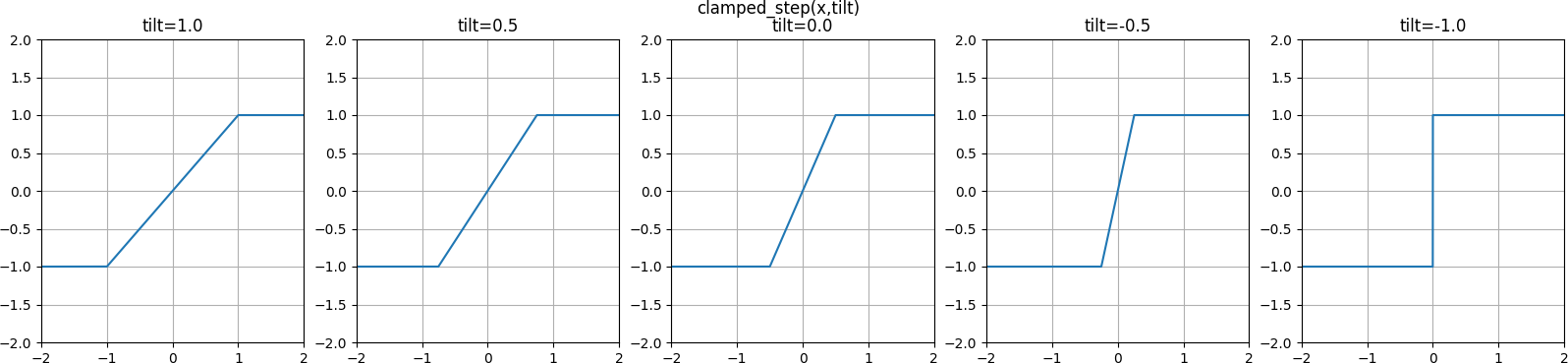

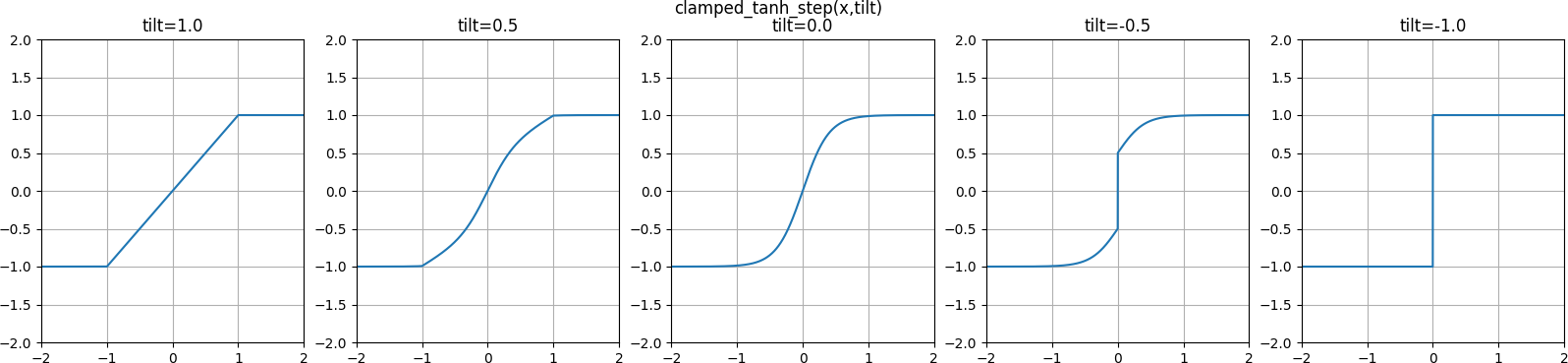

| clamped | clamped_tanh_step or clamped_step | |

| cube | multiparam_pow | |

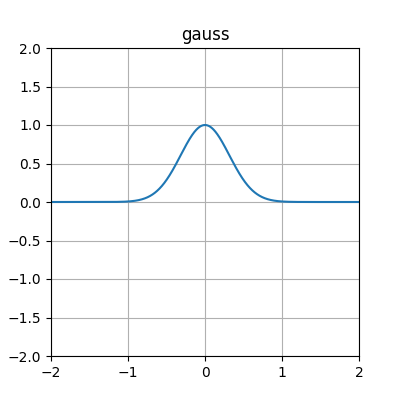

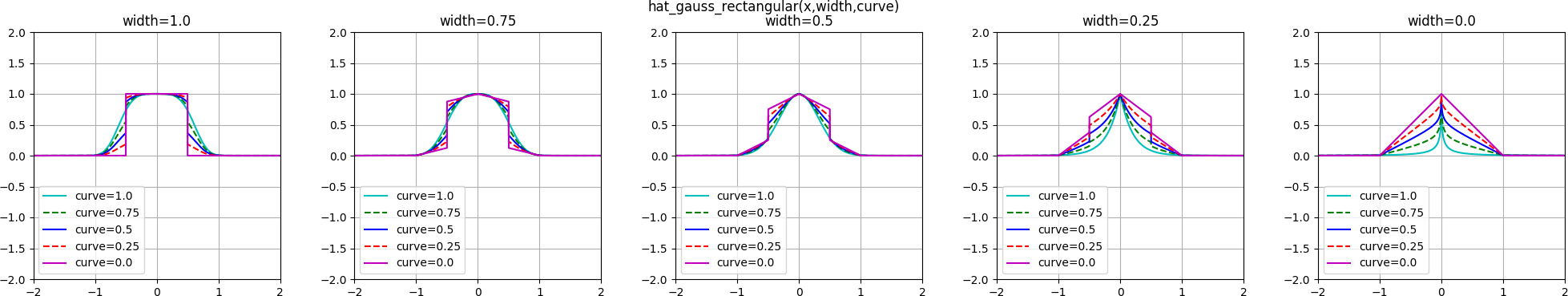

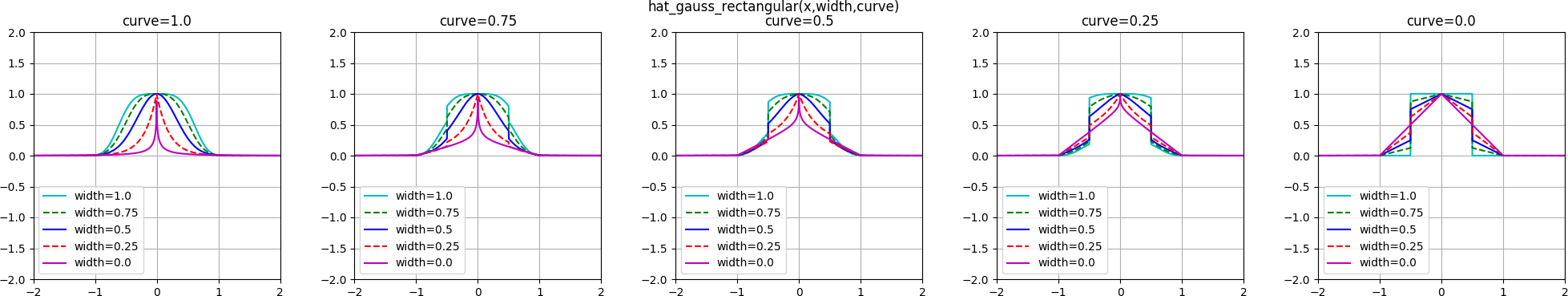

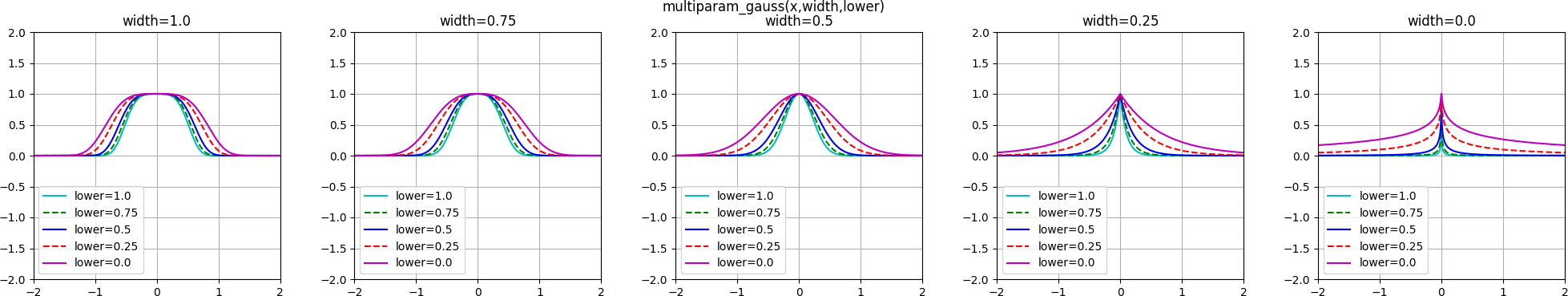

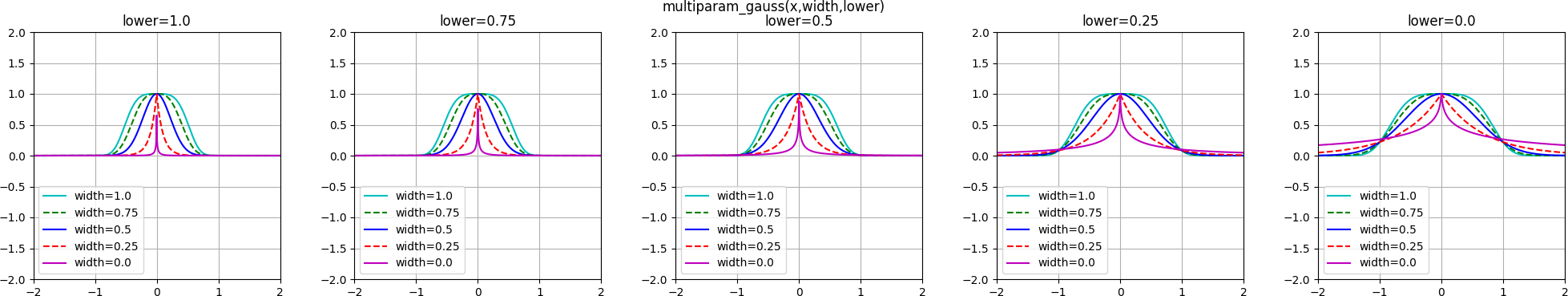

| gauss | hat_gauss_rectangular or multiparam_gauss | Either is suitable for seeding a HyperNEAT-LEO CPPN |

| hat | hat_gauss_rectangular | |

| identity | multiparam_relu, multiparam_relu_softplus, multiparam_pow, or weighted_lu | |

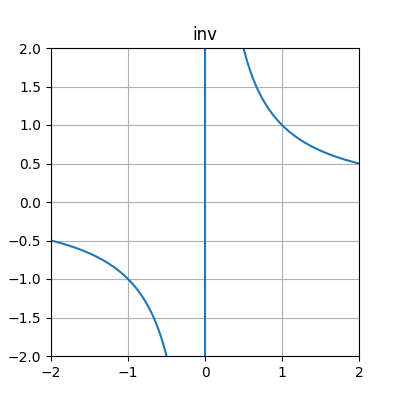

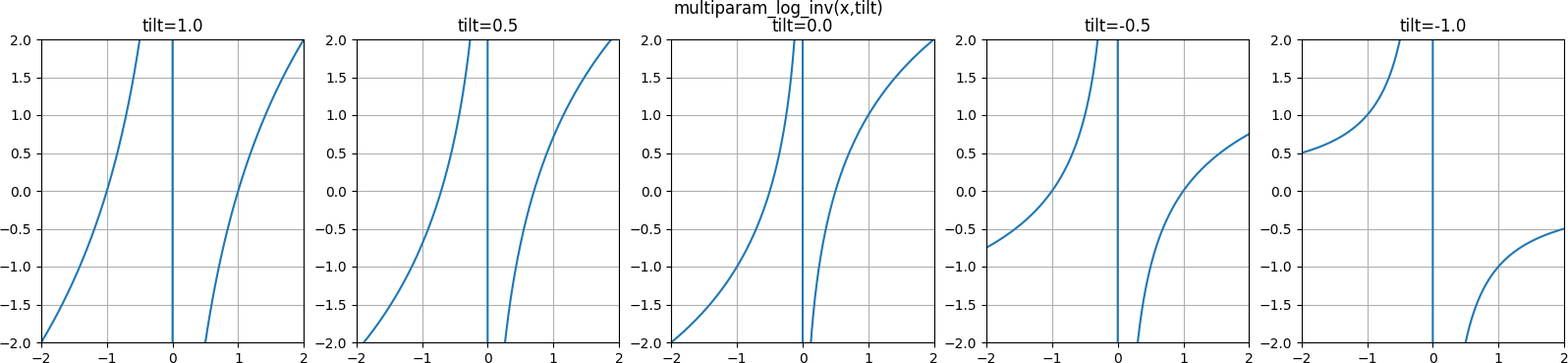

| inv | multiparam_log_inv | If for a CPPN |

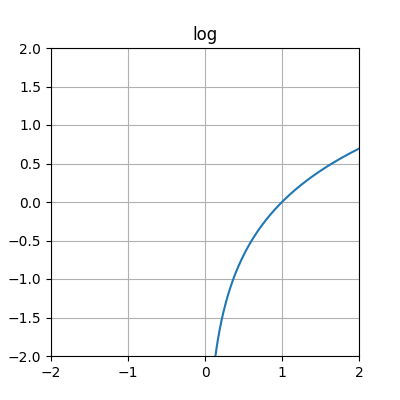

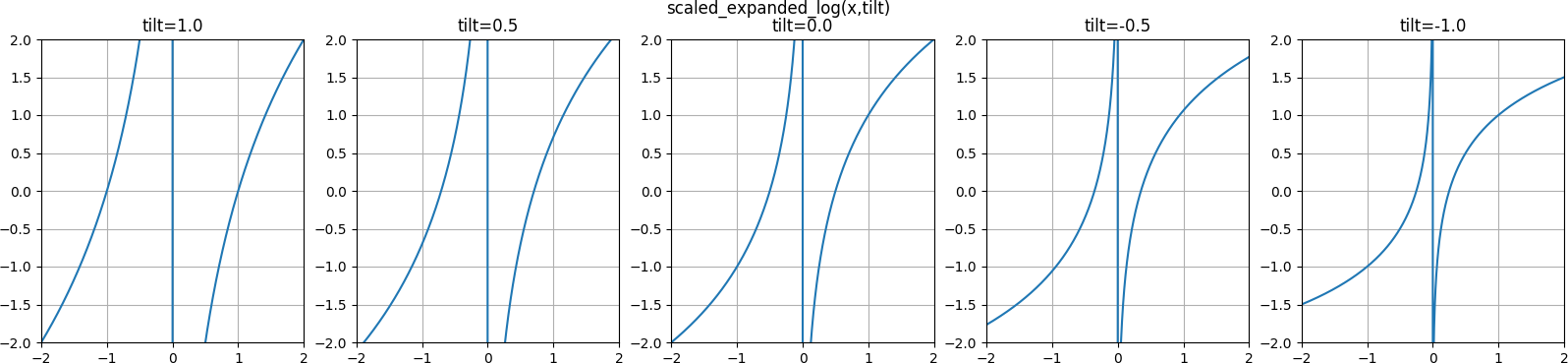

| log | scaled_expanded_log or multiparam_log_inv | If for a CPPN |

| relu | multiparam_relu, multiparam_relu_softplus, or weighted_lu | If the result does not need to be positive |

| relu | multiparam_softplus | If the result needs to be positive |

| sigmoid | multiparam_sigmoid | |

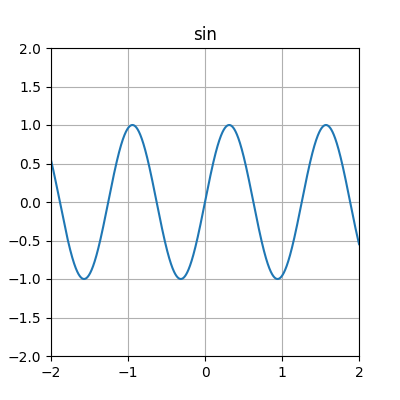

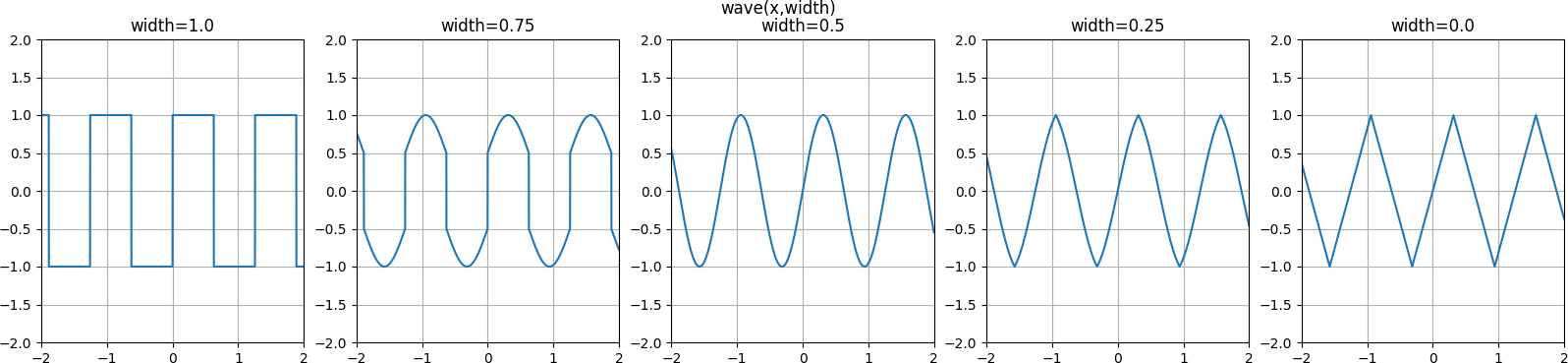

| sin | wave | |

| softplus | multiparam_relu_softplus | If the result does not need to be positive |

| softplus | multiparam_softplus | If the result needs to be positive |

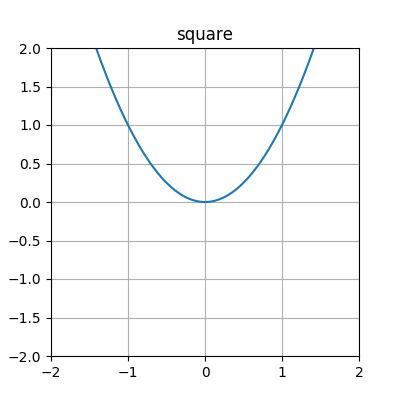

| square | multiparam_pow | If the result does not need to be positive |

| square | fourth_square_abs | If the result needs to be positive |

| tanh | clamped_tanh_step | If the result needs to be within [-1, 1] |

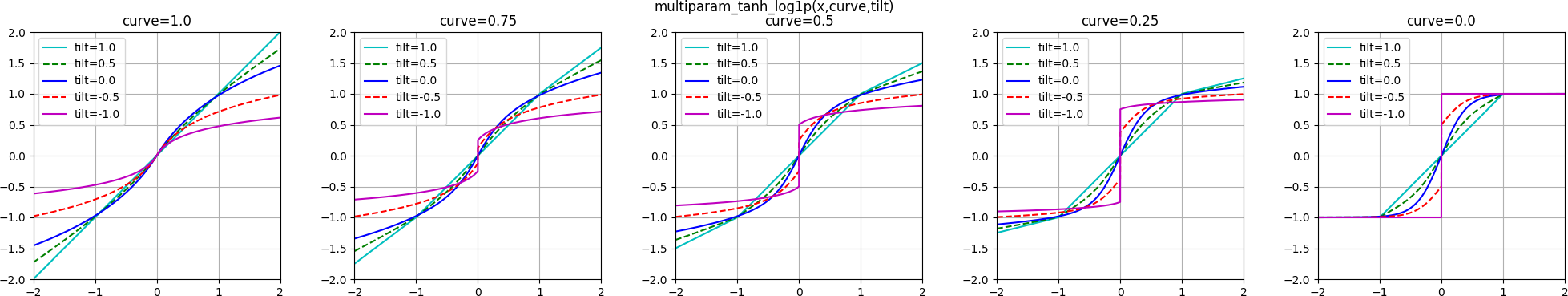

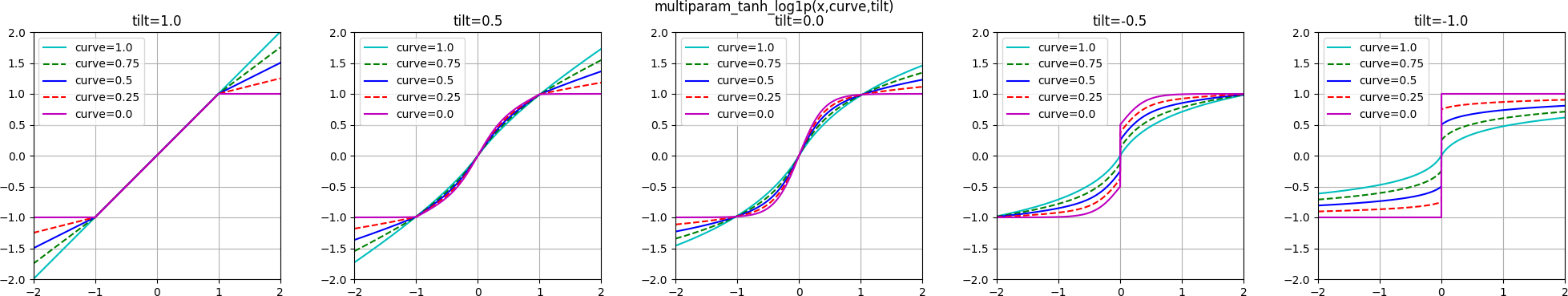

| tanh | multiparam_tanh_log1p | If it does not need to be within [-1, 1] |

The builtin multiparameter functions are also present to serve as examples of how to construct and configure new such functions.
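To make the idea concrete, here is a minimal sketch of what a multiparameter activation function could look like: an ordinary function that takes the node input plus one extra shape parameter. The name `relu_family`, the formula max(z, a * z), and the parameter range are hypothetical; the builtin multiparam_relu may be defined and configured differently, so consult the activations module for the real definitions.

```python
def relu_family(z, a):
    """Hypothetical one-parameter family: max(z, a * z).

    a = 1  -> identity
    a = 0  -> relu
    a = -1 -> abs
    Values of a between these give 'leaky' variants.
    """
    if not -1.0 <= a <= 1.0:
        raise ValueError("a is assumed to lie in [-1, 1]")
    return max(z, a * z)


if __name__ == "__main__":
    for a in (1.0, 0.0, -1.0):
        print(a, [relu_family(z, a) for z in (-2.0, 0.5, 3.0)])
```

Read this way, the substitution table suggests why one multiparameter function can stand in for abs, identity, and relu: a single evolvable parameter selects among them. How such a function is registered and how its parameter range is configured is not shown here.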

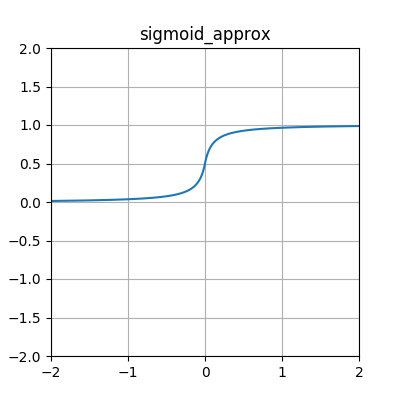

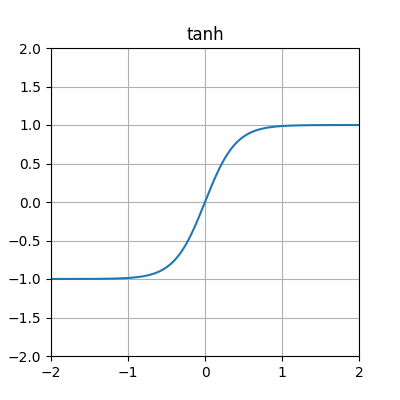

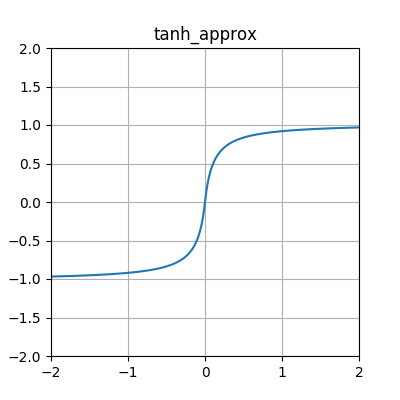

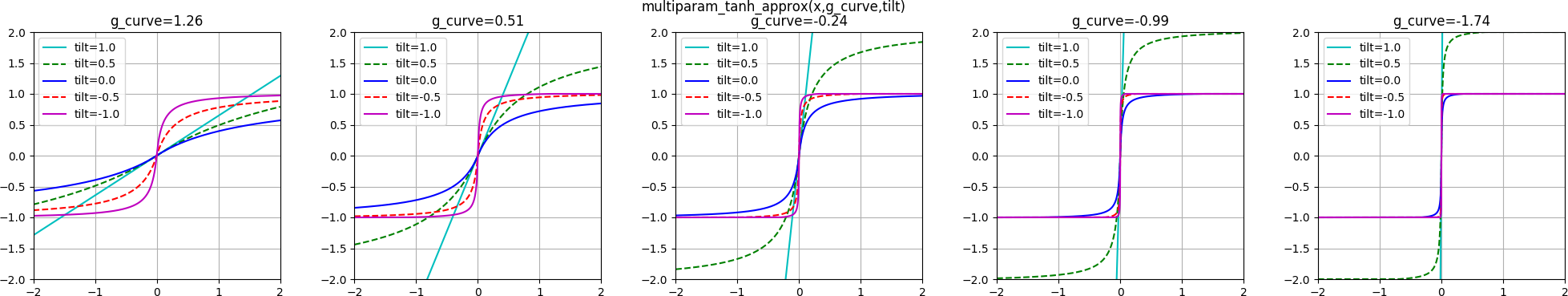

Two potentially-faster approximations of the sigmoid and tanh functions have also been added, namely sigmoid_approx and tanh_approx.
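For illustration only, one well-known cheap stand-in for the logistic sigmoid replaces the exponential with the rational expression z / (1 + |z|), shifted into the range (0, 1). This is not necessarily the formula used by sigmoid_approx; the function names below are hypothetical, and the sketch only shows the kind of accuracy such approximations give up.

```python
import math


def exact_sigmoid(z):
    """Logistic function, used here as the reference."""
    return 1.0 / (1.0 + math.exp(-z))


def fast_sigmoid(z):
    """Rational approximation: no call to exp, same limits of 0 and 1."""
    return 0.5 * (z / (1.0 + abs(z))) + 0.5


def max_abs_error(lo=-6.0, hi=6.0, steps=1201):
    """Largest absolute difference between the two on an evenly spaced grid."""
    worst = 0.0
    for i in range(steps):
        z = lo + (hi - lo) * i / (steps - 1)
        worst = max(worst, abs(exact_sigmoid(z) - fast_sigmoid(z)))
    return worst


if __name__ == "__main__":
    print("max abs error on [-6, 6]:", max_abs_error())
```

The approximation is monotonic and agrees with the exact function at z = 0, but its shape differs noticeably elsewhere, so results evolved with one version may not transfer unchanged to the other.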

General-use activation functions (single-parameter)

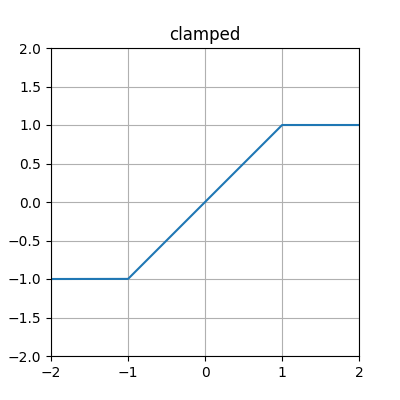

clamped

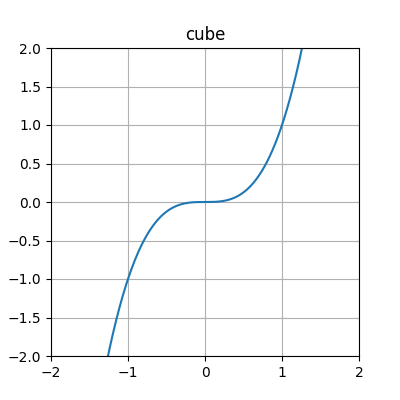

cube

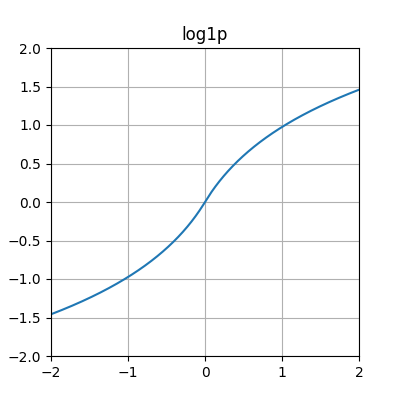

log1p

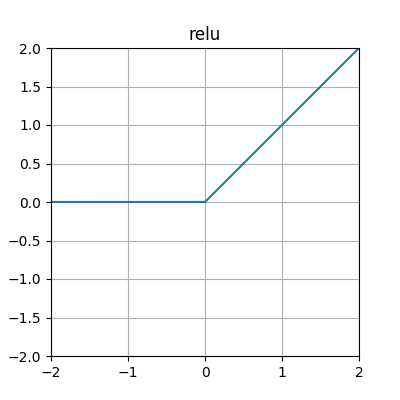

relu

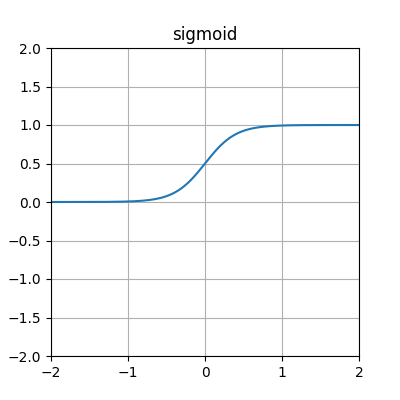

sigmoid

sigmoid_approx

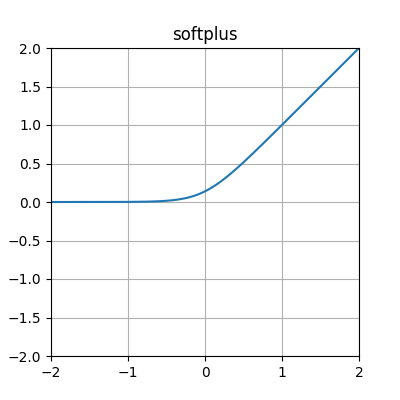

softplus

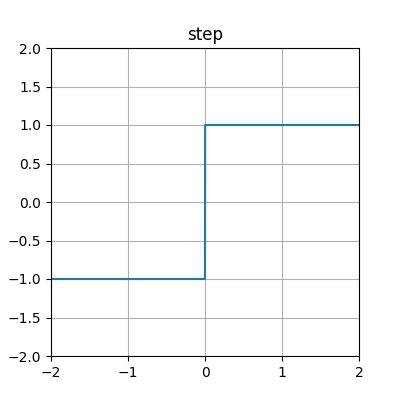

step

tanh

tanh_approx

General-use activation functions (multiparameter)

clamped_step

clamped_tanh_step

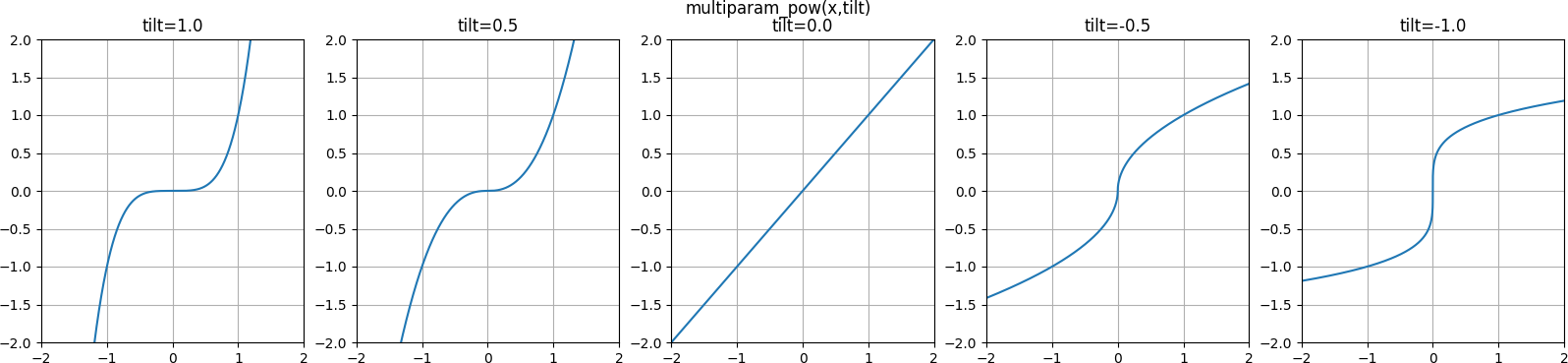

multiparam_pow

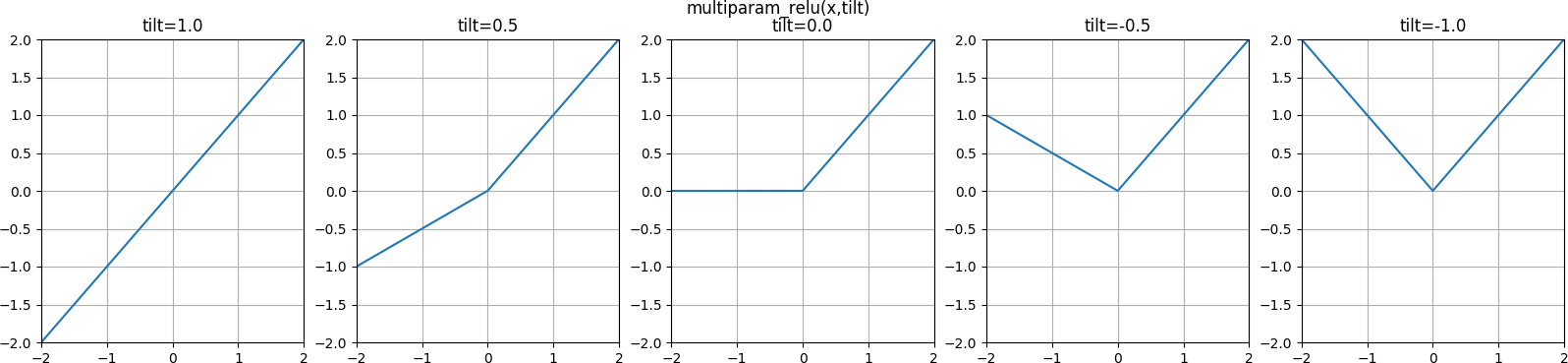

multiparam_relu

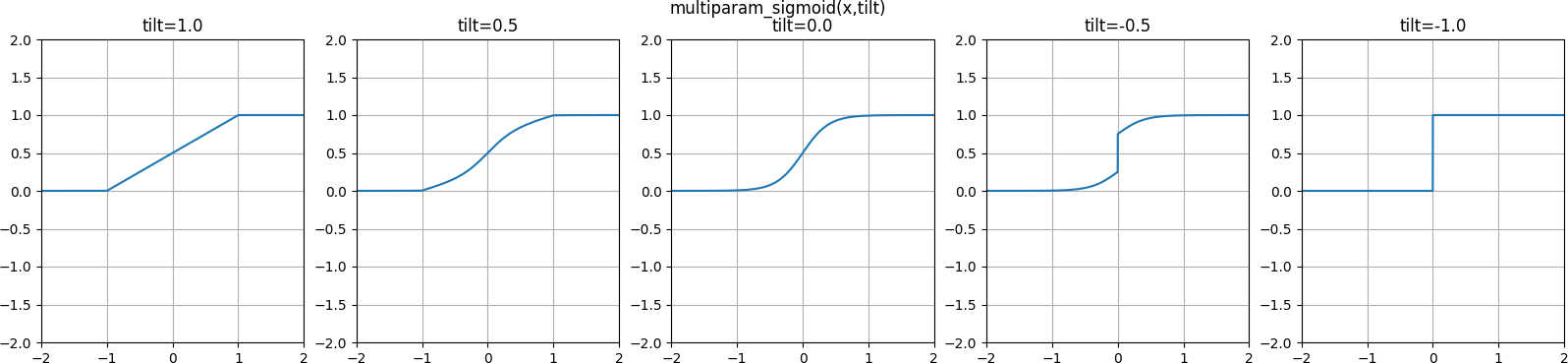

multiparam_sigmoid

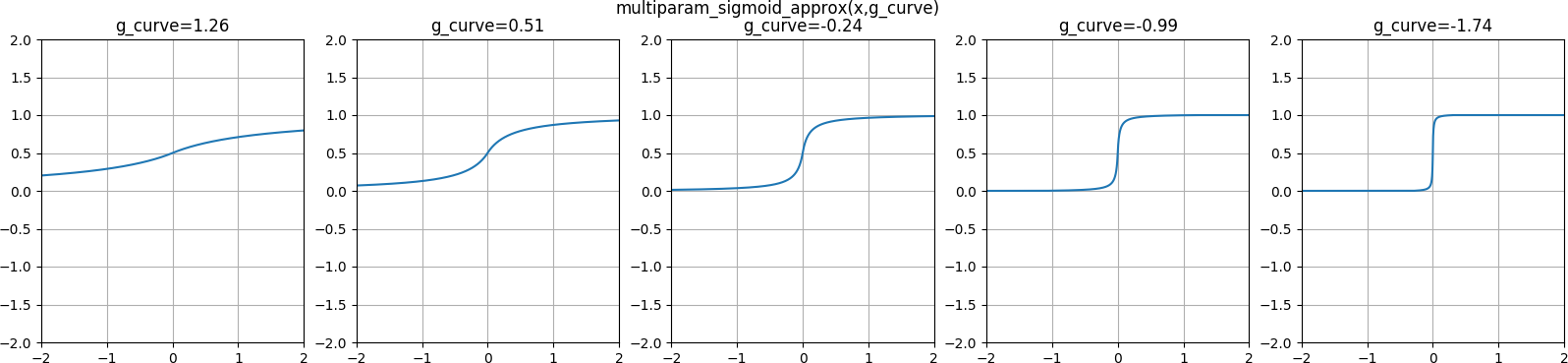

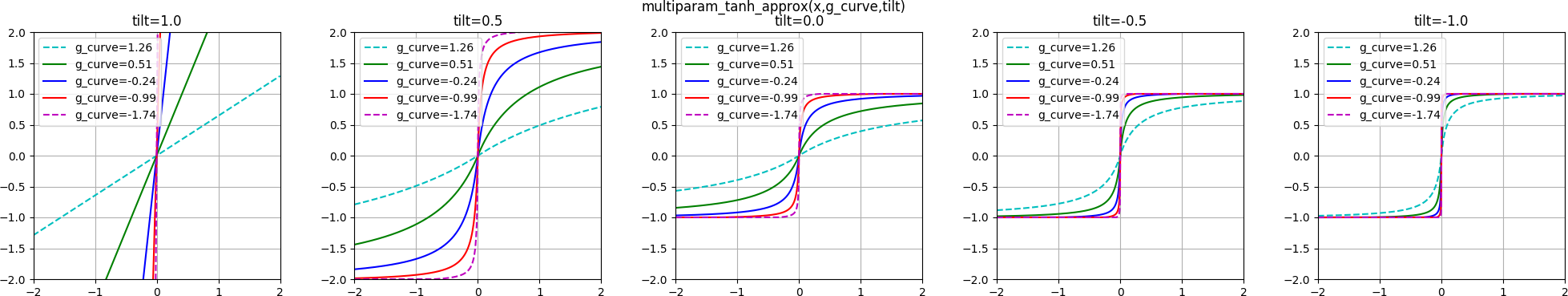

multiparam_sigmoid_approx

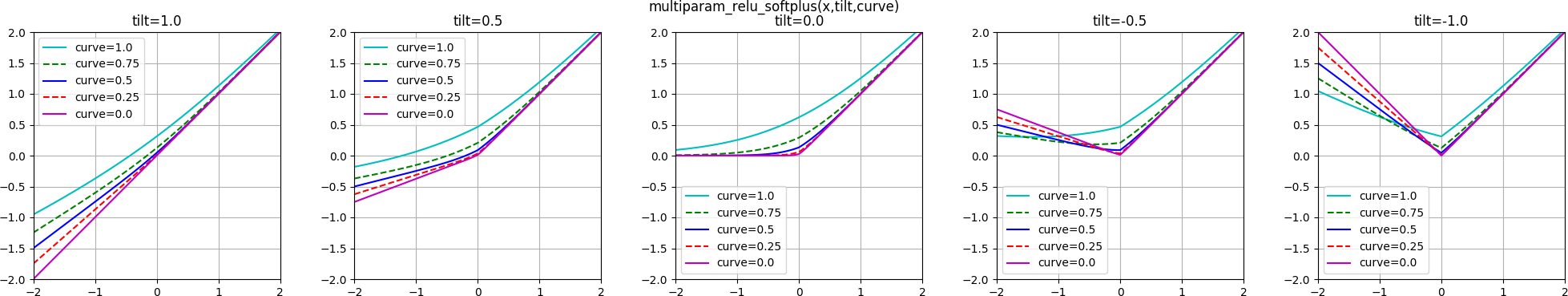

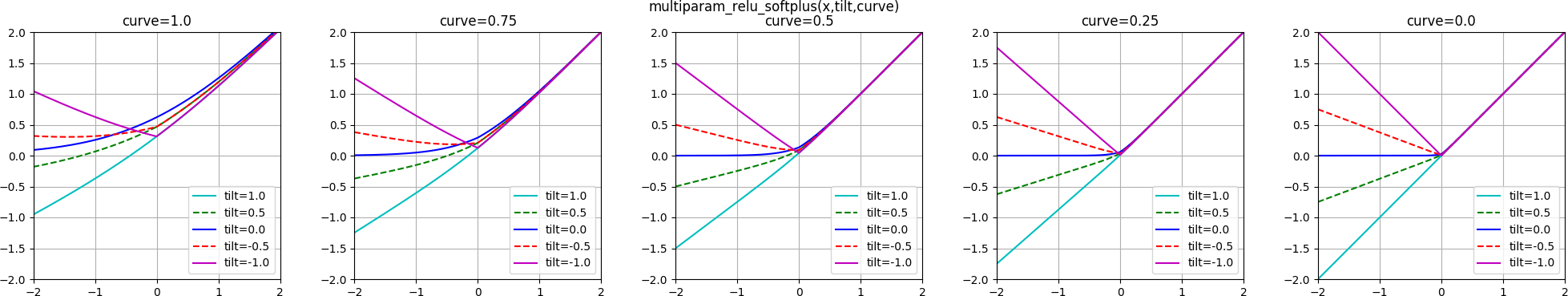

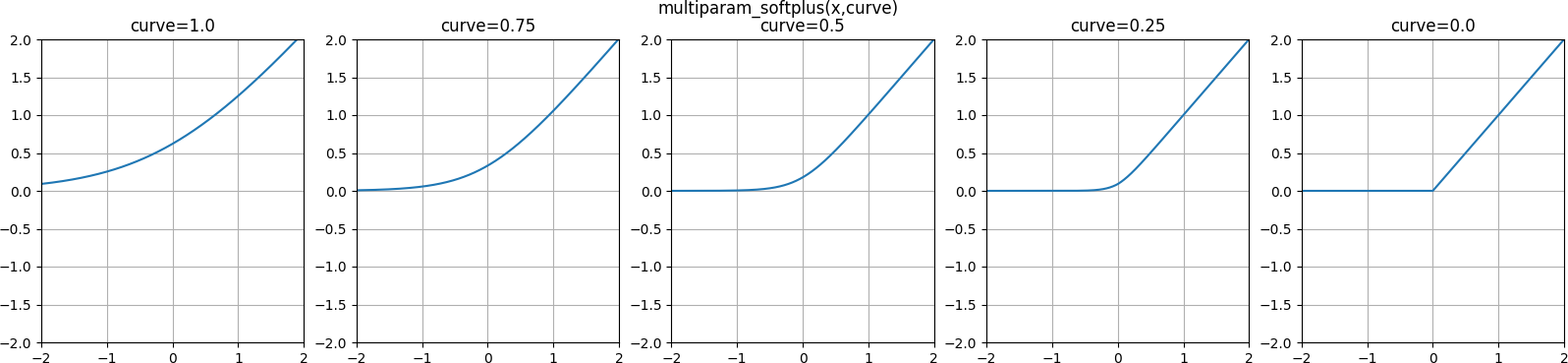

multiparam_softplus

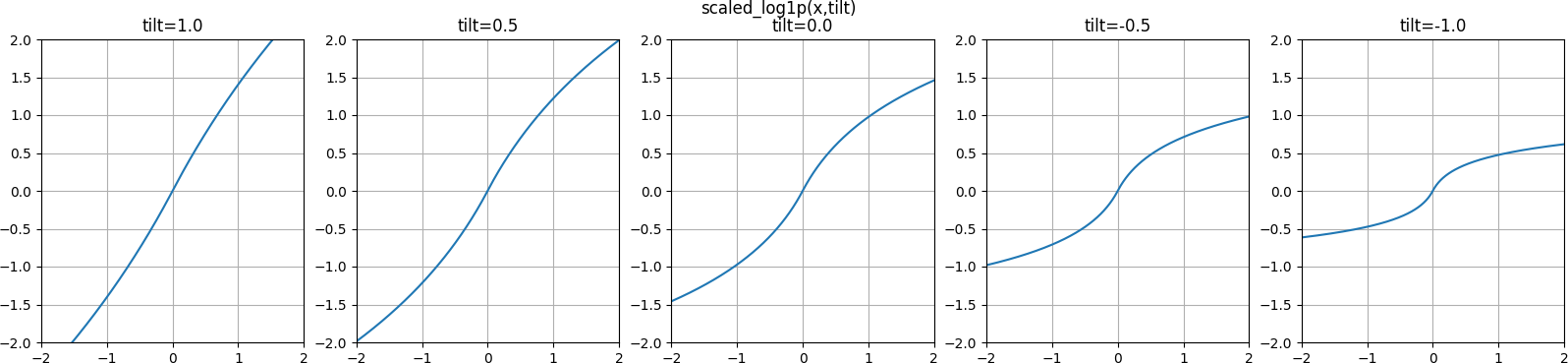

scaled_log1p

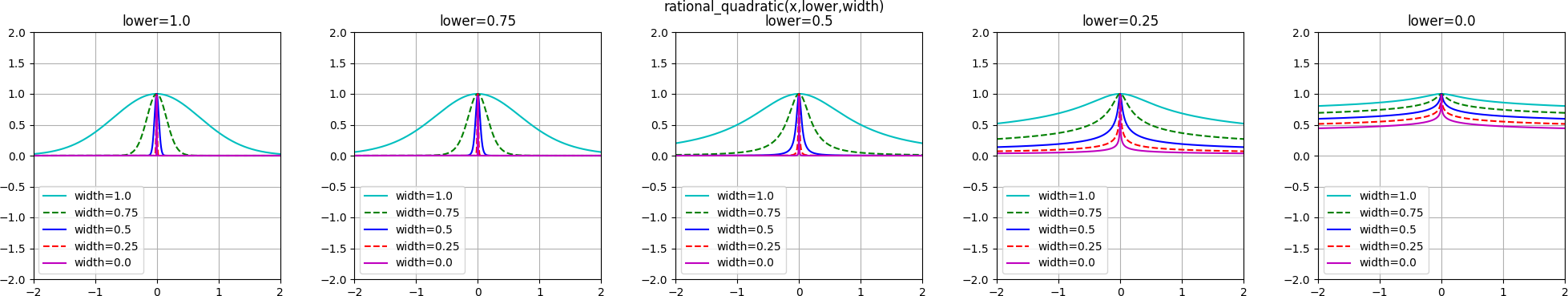

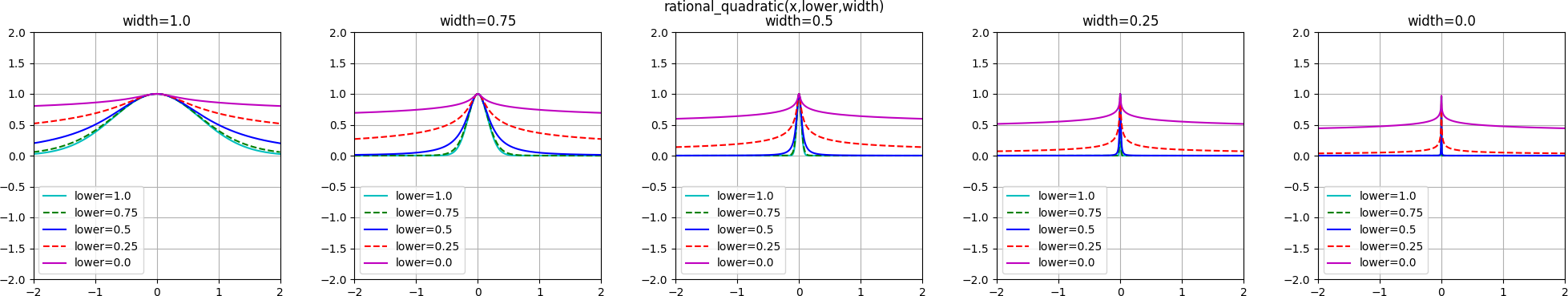

CPPN-intended activation functions (multiparameter)

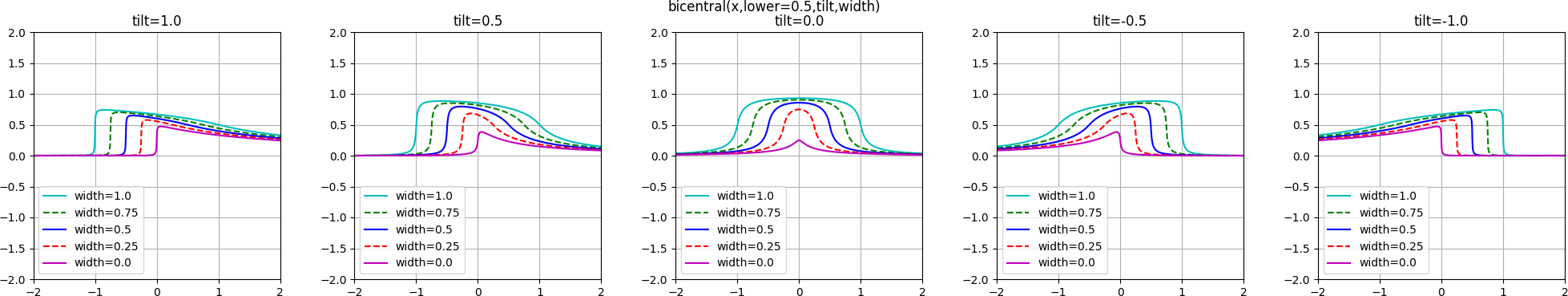

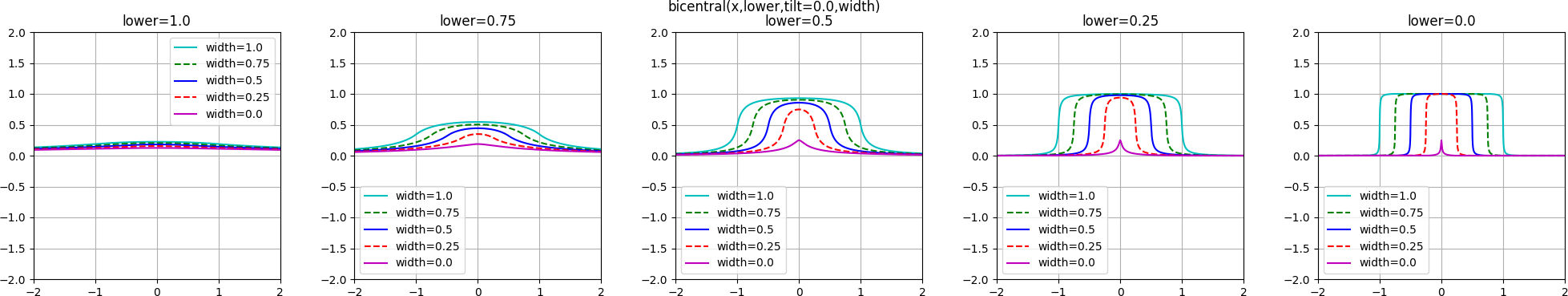

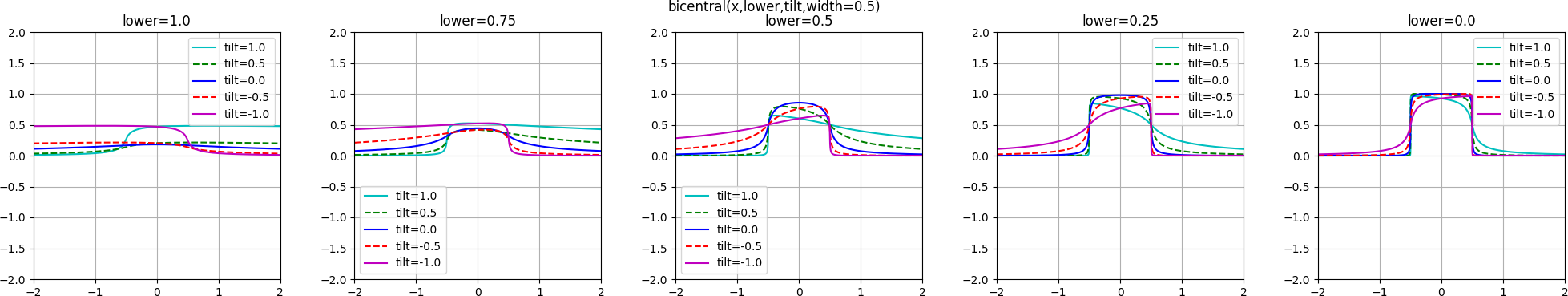

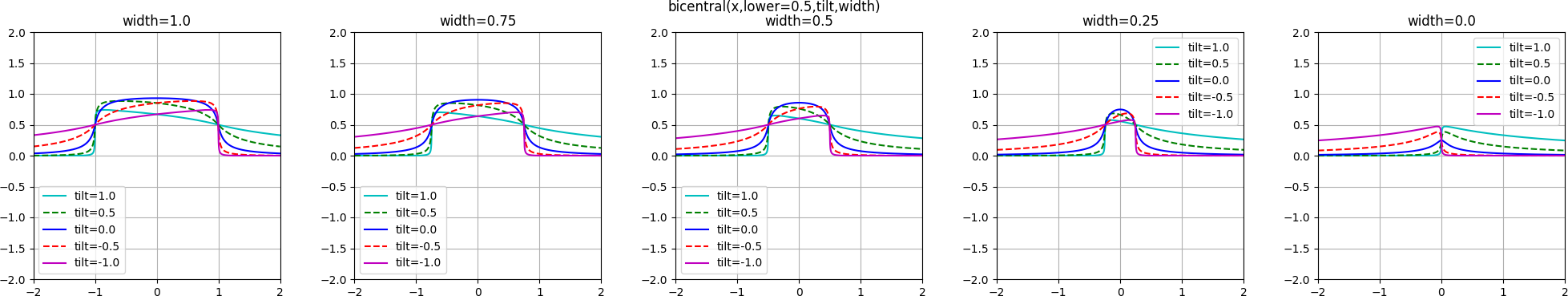

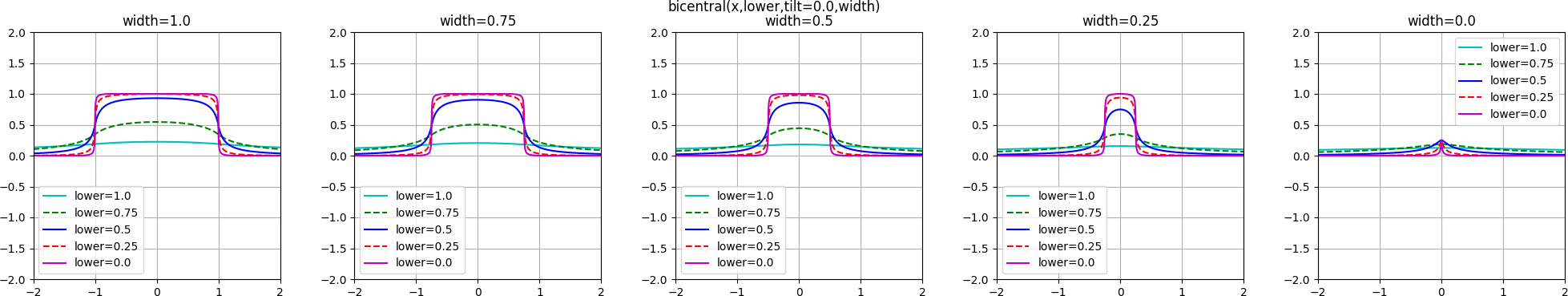

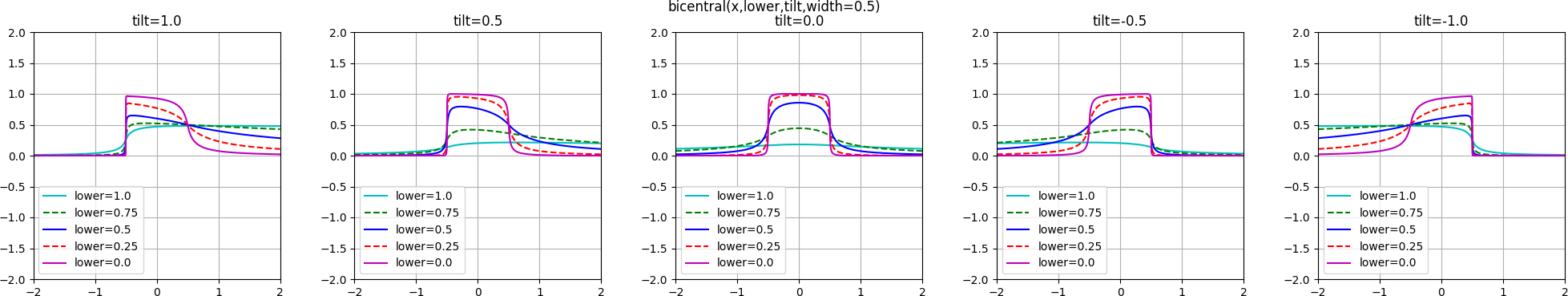

bicentral

This combination of two sigmoid functions is taken from the article “Taxonomy of neural transfer functions” [taxonomy], with adjustments.
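As a rough sketch of the underlying idea, multiplying a rising sigmoid by a falling one gives a soft window around a center. The parameter names (`center`, `half_width`, `slope`) and their defaults are assumptions for illustration; the builtin bicentral function applies its own adjustments and may differ.

```python
import math


def sigmoid(z):
    """Logistic function with the input clamped to avoid overflow."""
    z = max(-60.0, min(60.0, z))
    return 1.0 / (1.0 + math.exp(-z))


def bicentral_sketch(z, center=0.0, half_width=1.0, slope=5.0):
    """Soft window: near 1 inside [center - half_width, center + half_width],
    near 0 far outside, with the edge steepness set by slope."""
    rising = sigmoid(slope * (z - center + half_width))
    falling = 1.0 - sigmoid(slope * (z - center - half_width))
    return rising * falling


if __name__ == "__main__":
    for z in (-3.0, -1.0, 0.0, 1.0, 3.0):
        print(z, round(bicentral_sketch(z), 4))
```

Unlike a Gaussian, a window built this way can have a flat top and independently adjustable edges, which is part of what makes it attractive for CPPNs that need localized responses.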

CPPN-use activation functions (single-parameter)

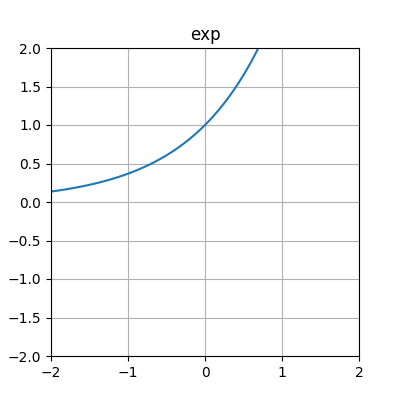

exp

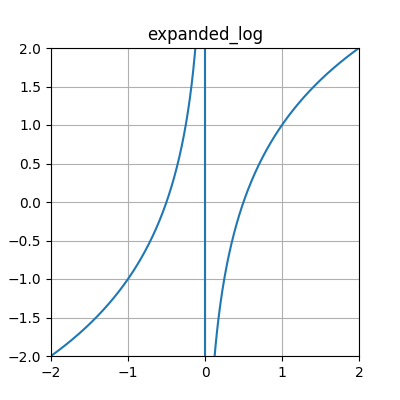

expanded_log

inv

log

sin

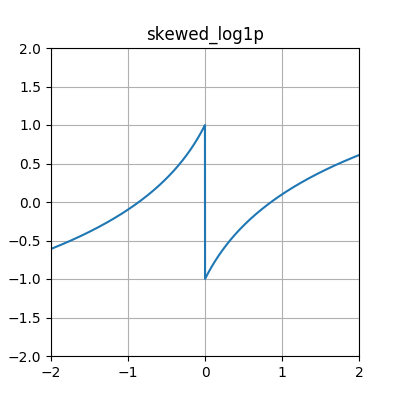

skewed_log1p

square

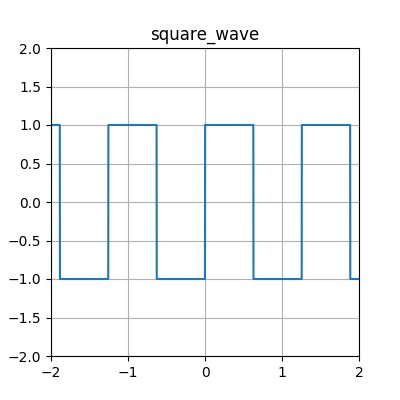

square_wave

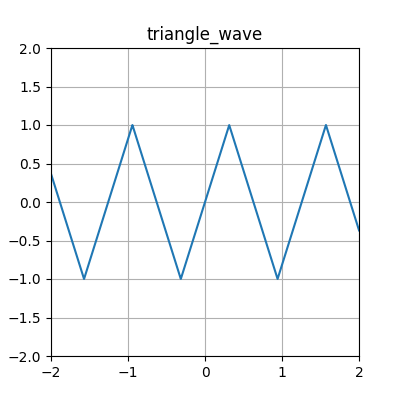

triangle_wave

CPPN-use activation functions (multiparameter)

fourth_square_abs

multiparam_log_inv

scaled_expanded_log

wave

[taxonomy] Duch, Włodzisław; Jankowski, Norbert. 2000. Taxonomy of neural transfer functions. IJCNN 2000.